A cop sprints down a brightly lit street, shouting into his radio as his body cam shakes violently. The suspect, a cat on an electric scooter, weaves through the Miami traffic at 25 miles an hour. The video was made with Sora 2, OpenAI’s new text-to-video generation model. “This is absolutely insane,” said my friend Luc, who created the video.

In the past six months, Luc has built over 15 channels that have garnered 6 million subscribers and over 250 million views per month. Still, I was surprised when he told me he had made $120K this month alone by uploading three AI-generated Reddit stories a day across 20 channels. “People can’t tell the difference anymore. I think the meta here is longer stories, like 40 to 50 (seconds) of just the most absurd (stuff) happening.”

That’s the world Sora 2 has created, and it’s safe to say media literacy is in trouble.

First Impressions:

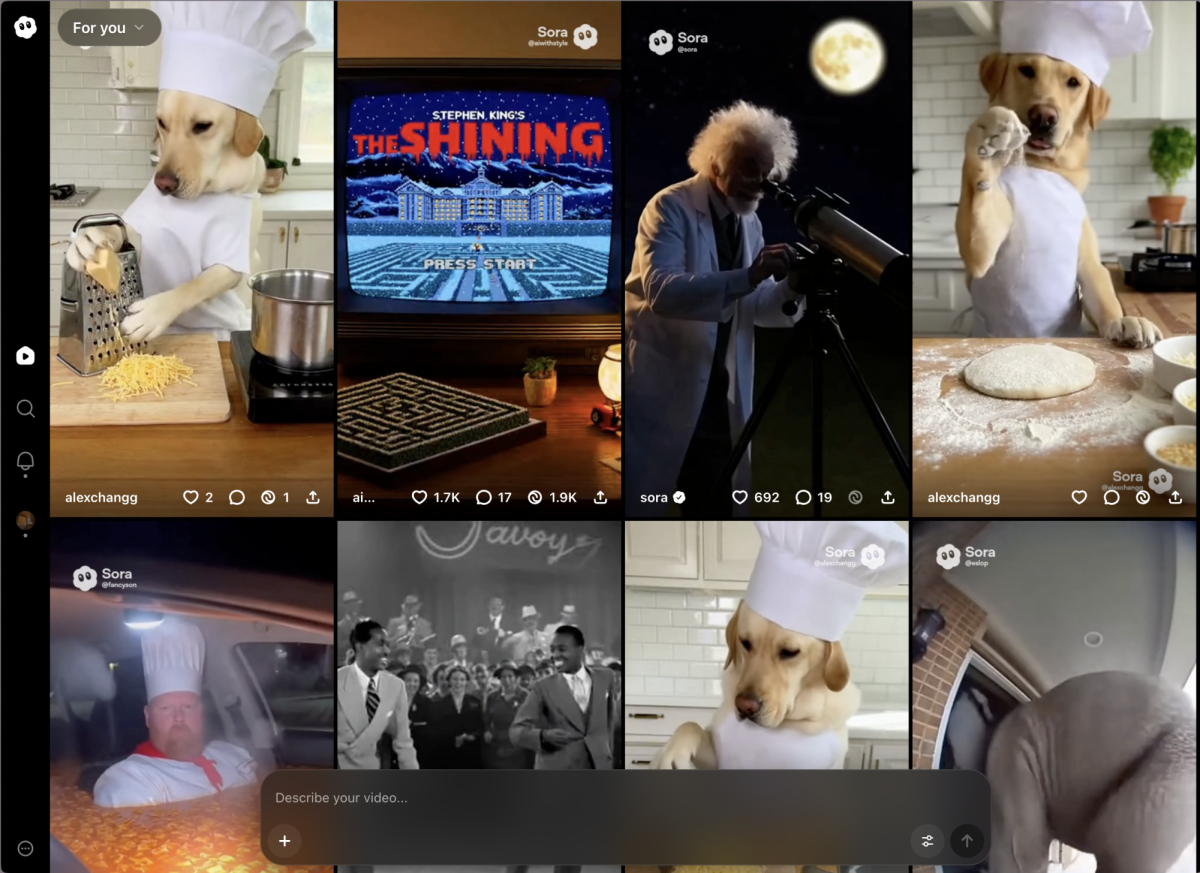

Go to Sora, type a few sentences and use a watermark remover. That’s all it takes to create a realistic video of anything. As of now, Sora 2 is free for anyone with a Google Account, and Sora 2’s Pro plan is included at no additional cost for ChatGPT Pro users with a $200/month subscription.

Sora 2 is revolutionary. Its progress is monumental compared to its predecessor, Sora 1, with fewer mistakes and a major leap forward in creating sophisticated background soundscapes, speech and sound effects with uncanny realism.

Within hours of release, TikTok was filled with Sora-made “AI slop”: fake police bodycam footage, Ring doorbell videos of events that never happened and short clips of Jake Paul putting on makeup. Thousands of Shorts, Reels and TikToks appeared online, each using approximately 100 watt-hours (Wh) of energy, the equivalent of running a computer for two to three hours, raising early questions about Sora 2’s environmental impact. Although every video technically contains a watermark, it’s often faint and can be easily removed using some websites, making it more difficult for viewers to tell whether what they’re seeing is real.

My Experience:

The first time I used Sora 2, I was shocked at how much better AI video had gotten in just two years. I instantly thought of a viral 2023 clip of Will Smith eating spaghetti. Back then, people mocked the video because it was so distorted and unrealistic. Now, the clip serves as a common benchmark for how bad AI video quality can be. To properly compare the past and present, I generated that exact clip for my first test; you can watch my result in the embed on the side of the screen. The new video was amazing. For a content creator like me, it was terrifying to see that people could now create a complete short film with minimal resources and an incredibly fast turnaround.

When I tried running prompts to create an anime-style video, a message appeared on screen that read, “This content may violate our guardrails around third-party likeness.” I was relieved to see that OpenAI had made efforts to ensure privacy for all users.

OpenAI has also blocked depictions of public figures, with the exception of those, such as Jake Paul, who have consented to AI videos being made of them. Despite this, many users have bypassed the restriction by simply describing a celebrity’s appearance without stating their name.

According to an article by OpenAI’s Sora team, the company aims to place users in control of their likeness end-to-end with Sora.

“We have guardrails intended to ensure that audio and image likeness are used with the user’s consent, via cameos,” the article reads.

By comparison, models like Google’s Veo 3.1 do not enforce the same strict guidelines, allowing greater freedom in generating videos of celebrities.

My Experience As a Sora Creator:

As a content creator who has been making short-form videos for several years, I’m extremely grateful to have reached over 200 million total views and 270,000 subscribers on my main channel. I’m constantly looking for new trends and opportunities on YouTube. In early October, I came across a growing wave of YouTube Shorts using AI-generated clips of surreal moments, like a tornado blowing a man away. Curious about how Sora videos might connect with audiences, I decided to start a new channel to experiment with the idea.

Within six days, the channel reached 200,000 views and gained over 5,000 subscribers, according to YouTube Studio. The average watch time was notably higher than on my previous channels, and many comments included phrases like “sora ai” or “ai,” showing genuine engagement. Every video included a clear AI disclaimer in both the caption and description.

Main Issues:

Sora 2 arrived before society was ready for it. Sites like viewmax.io can strip the watermark off a fake video to make it seem real, and anyone can slap a Sora watermark onto a real video to make it seem fake. That symmetry fuels misinformation and propaganda, and it erodes trust in anything we see online. The Guardian has already reported waves of violent and racist content flooding the app’s feed, showing that OpenAI’s guardrails remain porous at best.

At the end of the day, Sora 2 represents both the promise and the dangers of AI creativity. It’s capable of reshaping how stories are told, but also how lies are believed. Unless society learns to adapt as fast as the technology evolves, we risk losing our shared sense of truth altogether.